By Avery Ochs, Emma Kate Kawaja, Sydney Lehmann, & Isabel Mattern, Journalism ’25, for JOUR 4130 Gender, Race, and Class in Journalism and Mass Media with Victoria LaPoe, Fall 2024

During the fall 2024 semester, the staff of the Mahn Center for Archives and Special Collections worked intensively with Victoria LaPoe’s JOUR 4130 class, Gender, Race, and Class in Journalism and Mass Media. The students explored, selected, and researched materials from the collections, then worked in small groups to prepare presentations. For their final project, students could expand their research into a blog post like this one.

As future journalists, we believe it is crucial to understand how the media we consume shapes societal perceptions of race, gender, and class. In JOUR 4130, our group explored these relationships by analyzing media archived at Ohio University, including rare books, photographs, and other historical collections.

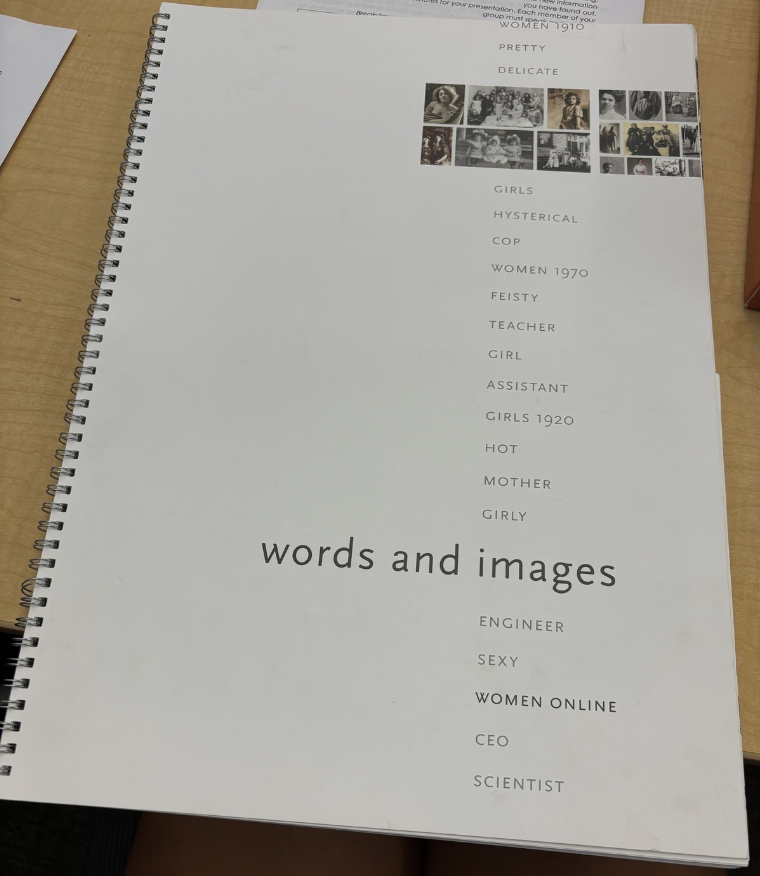

We chose to examine Paula Welling’s words and images: Women Online, an Ohio University MFA thesis in the Mahn Center’s rare book collection that investigates the images Google returns for specific search terms. Welling’s searches included keywords like “hot,” “pretty,” and “assistant.” The images returned for these terms reveal how women are portrayed in the media and highlight common gender and social stereotypes.

Welling’s words and images explores how gender norms and sexist ideologies have affected women over time. As a group of four young women, we found the book’s exploration of gendered stereotypes thought-provoking. Welling’s research concluded in 2017, but we wondered: How does artificial intelligence (AI) affect Google searches today? If the same prompts were entered into ChatGPT, would the results be the same? In this blog post, we expand on Welling’s research, examining how gender and racial stereotypes from the past continue to shape AI systems like ChatGPT.

Woman & Girl

While exploring words and images, we encountered Gigi Durham’s theory of the Lolita Effect, which examines the sexualization of young girls in the media. Durham’s work reveals how harmful stereotypes portray girls and women as objects, sexualizing them from an early age. The AI-generated images we analyzed for “woman” and “girl” vividly illustrated this dynamic: one depicted a composed, mature woman, while the other showed a delicate, smiling young girl. These contrasting visuals reflect societal stereotypes that divide women and girls into two categories: power and innocence. The woman, often portrayed as severe and controlled, symbolizes power through composure, while the girl’s innocence is frequently sexualized.

This visual divide relates directly to the Lolita Effect, which describes how the media presents flaunting “hotness” as a source of power while also perpetuating the disturbing idea that children can be “sexy.” The media glamorizes these ideas, blurring the line between childhood and adulthood. By reducing girls and women to objects of physical appeal, the media erases their individuality and reinforces the notion that a woman’s worth is tied to her looks rather than her character or abilities.

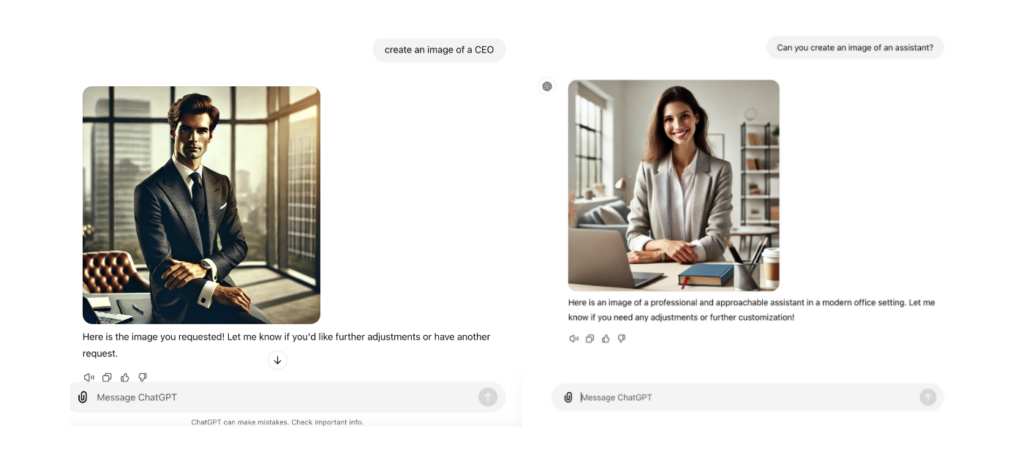

CEO & Assistant

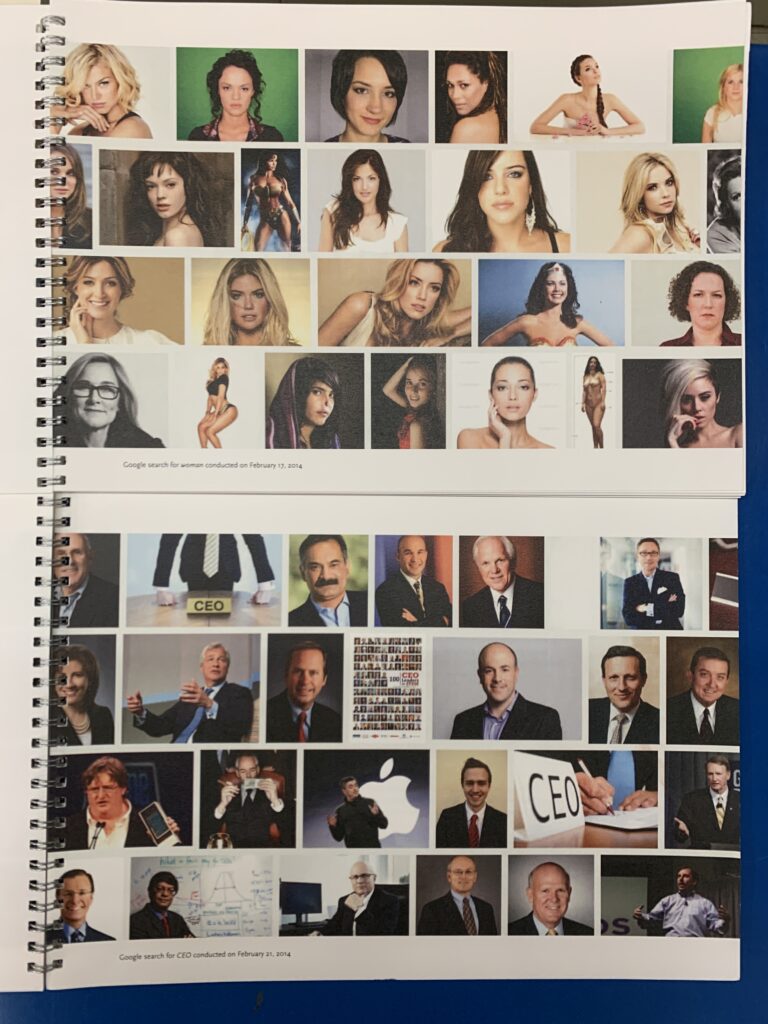

Those in power actively shape societal narratives, often reinforcing harmful stereotypes. In chapter seven of Underserved Communities and Digital Discourse: Getting Voices Heard, Benjamin R. LaPoe II and Dr. Jinx Broussard emphasize how mainstream media, dominated by white perspectives, has historically perpetuated negative stereotypes about people of color. These biases extend beyond race and intersect with deeply ingrained gender roles. Media portrayals of gender dynamics often reinforce the notion that women are subordinate to men, shaping societal perceptions and expectations.

The two images above show how AI mirrors and amplifies these biases. When prompted to generate an image of a CEO, AI consistently produced images of men, often confident and commanding. Conversely, when asked to depict an assistant, AI generated images of women, typically styled in supportive and subservient roles. These patterns show how AI systems internalize and reproduce the societal stereotypes embedded in the data on which they are trained.

By presenting these skewed depictions, AI doesn’t just reflect existing biases—it reinforces them. This cycle subtly influences how we perceive the roles of men and women, perpetuating the idea that men are naturally suited for leadership while women belong in subordinate roles. As AI becomes more integrated into society, the risk of these biases shaping cultural norms grows, underscoring the need to critically examine how AI technologies influence our perceptions of gender and power.

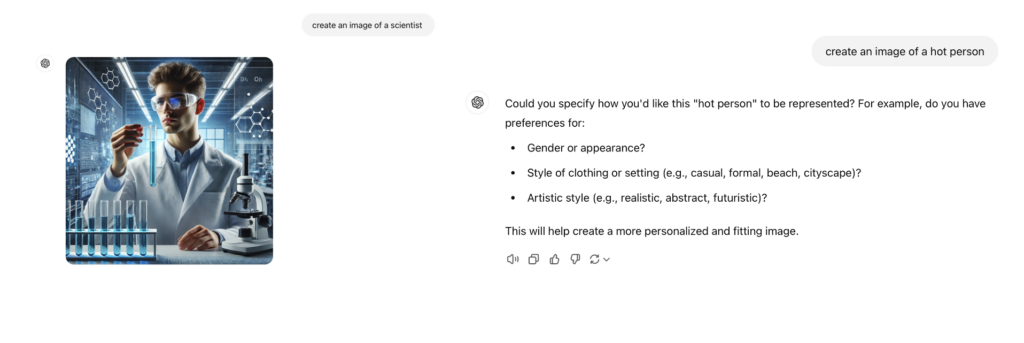

Scientist & Hot Person

The images above contrast two deeply ingrained societal stereotypes. When prompted to generate an image of a “scientist,” AI depicted a male figure, reinforcing the stereotype that men dominate scientific fields. When we asked AI to generate an image for the term “hot,” the results were strikingly different: AI was reluctant to produce one at all. This discrepancy highlights both societal biases and the limitations of AI in handling complex or subjective terms.

In words and images, Google’s results for “hot” typically showcase conventionally attractive individuals, reinforcing narrow beauty standards. AI’s reluctance to produce an image for the same term, by contrast, likely reflects the ethical boundaries built into the system, along with concerns over copyrighted material, which keep it from confidently generating images based on subjective or controversial terms.

The AI-generated image of the male scientist underscores how societal norms equate authority, intelligence, and expertise with masculinity. By failing to challenge these stereotypes, AI reflects and perpetuates them. This serves as a reminder of our responsibility as creators and consumers of technology to question and challenge biases that persist in AI systems.

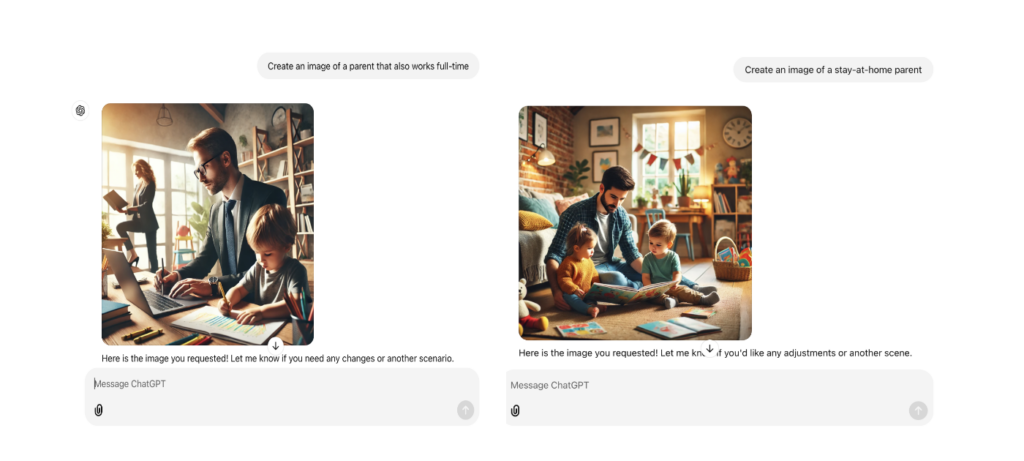

Full-Time & Stay-at-Home Parent

The double bind refers to the overlapping forms of discrimination faced by marginalized groups, such as African American women, who face both racial and gender discrimination. They encounter systemic barriers as women in a patriarchal society and are further marginalized by racism. These intersecting challenges often leave their voices unheard, forcing them to fight for recognition within the women’s and civil rights movements.

This exclusion extends into modern representations, including AI-generated images. When we prompted AI to generate images of parents, none of the results included African American women. This absence underscores how AI systems reflect societal biases, perpetuating the double bind. By excluding African American women, these images reinforce their marginalization in social, cultural, and professional narratives.

The lack of representation in AI-generated imagery has profound implications. It perpetuates stereotypes and erases African American women from media depictions, reinforcing their systemic invisibility. Addressing these biases requires a conscious effort to challenge AI systems and ensure they create inclusive, equitable representations that reflect the diversity of human experiences.

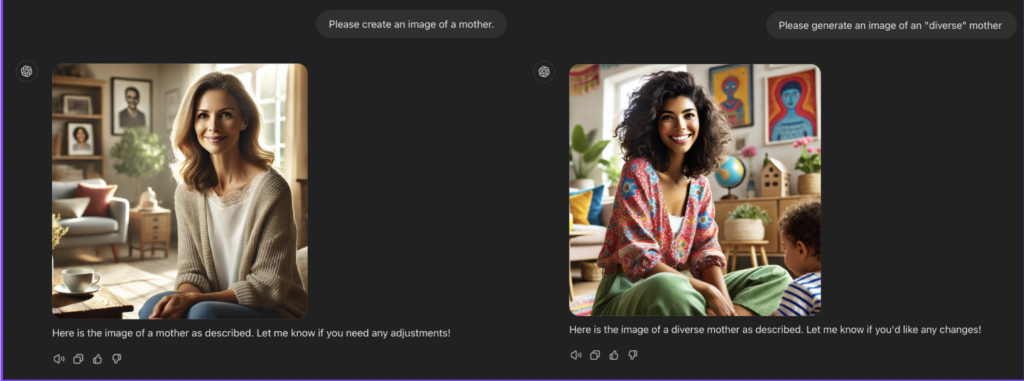

Mother & Diverse Mother

Lastly, our group explored racial bias within AI systems and uncovered troubling patterns. We asked ChatGPT to generate images based on descriptions like “a mother,” “an athletic woman,” and several other specific phrases. No matter how we phrased the request, the AI consistently produced images of white women. Only when we specifically requested a “diverse” image or asked for a woman of color did the system adjust its output. This experiment highlighted a deeper issue: AI systems, if not carefully designed, can reinforce racial biases by default. Such defaults not only misrepresent marginalized groups but also entrench harmful stereotypes. The results were a stark reminder of the importance of diversity and inclusivity in AI training and development.

We turned to feminist standpoint theory to better understand these biases. This theory suggests that one’s social position shapes knowledge and that marginalized groups—such as women and people of color—offer unique insights into societal issues that dominant groups may overlook. Applying this theory to AI development, we see how the exclusion of marginalized communities leads to skewed, incomplete representations. AI systems trained without diverse perspectives fail to reflect the complexity of the world, perpetuating harmful stereotypes.

Research on power dynamics must prioritize the experiences of marginalized communities. Their insights are crucial to addressing the inaccuracies and biases in AI systems. Without their inclusion, AI will continue to misrepresent and harm these groups, particularly women of color, who are disproportionately affected by these biases. For example, in 2023, BuzzFeed used the AI image generator Midjourney to produce images of Barbie representing countries around the world. Readers were shocked to see the German Barbie depicted with Nazi imagery and the South Sudan Barbie holding a rifle. These are just two examples of how embedded biases and racism can shape AI outputs. Such issues are unfortunately common across AI systems, and they harm marginalized groups by reinforcing stereotypes and perpetuating the damaging narratives already found in the media.

Conclusion

Informed by words and images, our research examined how evolving societal norms shape media and technology. By exploring how gender and racial stereotypes in media influence AI, we have highlighted the unconscious biases embedded in these systems and their far-reaching consequences. These biases shape how we view the world, perpetuating harmful stereotypes that affect marginalized groups. As AI continues to evolve, we must remain vigilant about how it is developed. This analysis underscores the need for greater awareness and accountability in AI creation and urges us to consider the lasting effects these biases have on society. The question remains: How can we ensure that AI evolves to reflect a more inclusive and equitable world rather than reinforcing existing stereotypes?

References

Bowell, Tracy. “Feminist Standpoint Theory.” Internet Encyclopedia of Philosophy, iep.utm.edu/fem-stan/. Accessed 8 Oct. 2024.

Broussard, Jinx, and Benjamin R. LaPoe II. Underserved Communities and Digital Discourse: Getting Voices Heard. Lexington Books/Fortress Academic, 31 Oct. 2018, pp. 137-156.

Broussard, Jinx. Giving a Voice to the Voiceless: Four Pioneering Black Women Journalists. Routledge, 2004.

ChatGPT. OpenAI, chatgpt.com/. Accessed 3 Dec. 2024.

Durham, Meenakshi Gigi. The Lolita Effect: The Media Sexualization of Young Girls and What We Can Do About It. Woodstock, NY, Overlook Press, 2008.

Jamieson, Kathleen Hall. Beyond the Double Bind. Oxford University Press, 1995.

Koh, Reena. “A List of AI-Generated Barbies from ‘Every Country’ Gets Blasted on Twitter for Blatant Racism and Endless Cultural Inaccuracies.” Business Insider, www.businessinsider.com/ai-generated-barbie-every-country-criticism-internet-midjourney-racism-2023-7. Accessed 3 Dec. 2024.

“What Is (AI) Artificial Intelligence?” Online Master of Engineering, University of Illinois Chicago, 7 May 2024, meng.uic.edu/news-stories/ai-artificial-intelligence-what-is-the-definition-of-ai-and-how-does-ai-work/.